Many ideas were exchanged at the OER and eBook Accessibility Sprint, held from May 20th to the 23rd. Thirty technologists, accessibility experts and educators gathered to define and prototype potential end-to-end solutions for making OER content and eBooks more accessible to learners with disabilities and special needs. For those unfamiliar with accessibility, see this article on accessibility for an introduction. For examples of how accessibility relates to eBooks and OER content, see Kathi Fletcher’s “Born Digital, Born Accessible Learning Sprint” blog post.

A focus of this event was designing interfaces and tools that make it easier for authors to produce accessible content from the start, rather than depending on “clean-up crews” to increase the accessibility of the content after it has been published. To this end, half a day was spent with Jutta Treviranus, Joanna Vass and Yura Zenavich from Inclusive Design, brainstorming potential ways to get authors to create accessible links when writing their content. The resulting idea was a dialog that asks authors to provide a description of their link when the hypertext does not appear informative. Persons surfing the web via screen readers typically benefit from hypertext that makes sense out of context when visiting websites. However, it is debatable whether they also benefit from hypertext that makes sense out of context when consuming educational content.

Background and Problem

Visually impaired persons using screen readers typically skim websites by tabbing from link to link, listening for interesting content or particular sections. Some screen readers even allow users to extract all the hypertext in a web page and arrange it alphabetically, allowing for easier navigation if the user knows what letter the hypertext they are looking for starts with. Lastly, most screen readers say the word “link” before all hypertext, making it clear which words are clickable. All this has certain implications for authoring accessible links.

- Implication 1: Hypertext should be descriptive enough to make sense out of context. Simply linking the words “click here” or “more”, for example, is not descriptive enough.

- Implication 2: Distinguishing information should be placed at the beginning of the hypertext. For instance, hypertext such as “click here to log in” or “click here for an example article” makes it difficult to navigate through links listed in alphabetical order, because both are filed under “c” rather than under the words that set them apart.

- Implication 3: Placing the word “link” in the hypertext is not necessary. Screen readers say the word “link” before all hypertext, so including it is redundant.
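The three implications above can be sketched as a rough check on a link's hypertext. This is only an illustrative heuristic, not something built at the sprint: the function name `check_link_text` and the phrase lists are assumptions, seeded with the “click here” and “more” examples from the list.

```python
import re

# Assumed phrase lists: the post names only "click here" and "more" as examples.
VAGUE_PHRASES = {"click here", "more"}
VAGUE_LEAD_IN = "click here "


def check_link_text(text: str) -> list[int]:
    """Return the numbers of the implications (1-3) the hypertext seems to violate."""
    # Lowercase and collapse whitespace so "Click  Here" matches "click here".
    normalized = " ".join(text.lower().split())
    violated = []
    if normalized in VAGUE_PHRASES:
        # Implication 1: the text is nothing but a vague phrase.
        violated.append(1)
    elif normalized.startswith(VAGUE_LEAD_IN):
        # Implication 2: the distinguishing words come after a vague lead-in.
        violated.append(2)
    if re.search(r"\blink\b", normalized):
        # Implication 3: "link" repeats what the screen reader already announces.
        violated.append(3)
    return violated


print(check_link_text("click here"))            # violates implication 1
print(check_link_text("Click here to log in"))  # violates implication 2
print(check_link_text("Log in"))                # no violations found
```

A check like this can only catch the obvious cases; it cannot tell whether a longer phrase is actually meaningful out of context.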

Since it is difficult to design an interface that gently requires authors’ hypertext to meet all of these criteria, we focused specifically on designing an interface that helps authors produce links that contain enough description for those using screen readers.

Solution

To help authors associate adequate descriptions with their links, we mocked up a redesign of our current link dialog so that it asks authors to provide more descriptive information when we believe it is needed.

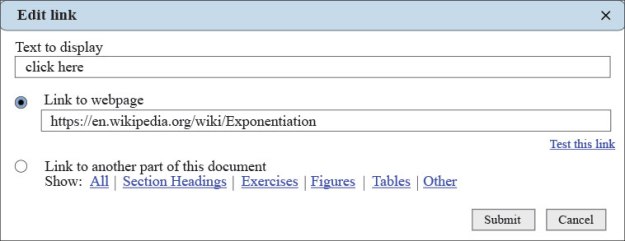

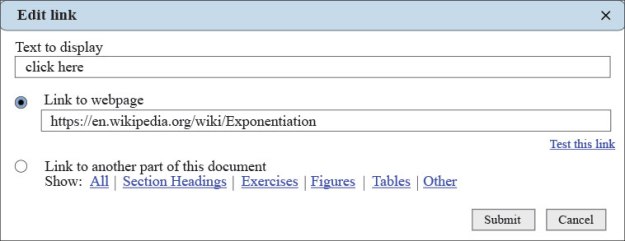

Below is an illustration of our current dialog.

As shown in the image above, the only information about the link comes from the “Text to display” field. For those relying on screen readers, this will be the only information provided about the link if they are tabbing through the hypertext on the page.

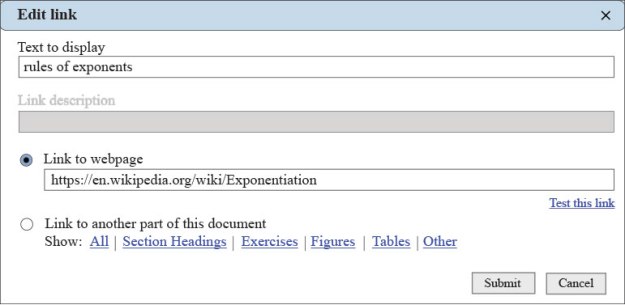

Below is an illustration of the redesign.

As shown in the image above, when the “Text to display” is too short, or consists only of blacklisted keywords such as “click here” or “more”, a “Link description” field is activated that asks the author to provide a description of the link. This description would be placed in the link’s title attribute in the page’s HTML, which screen readers will read if they are set to do so.
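The rule described here could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the dialog's actual implementation: the names `needs_description` and `render_link`, the `MIN_LENGTH` threshold, and the example URL are all hypothetical, and the post does not say how short “too short” is.

```python
from html import escape

# Assumed values: the post gives only "click here" and "more" as examples
# and does not define a length threshold.
BLACKLIST = {"click here", "more"}
MIN_LENGTH = 5  # hypothetical cutoff for "too short"


def needs_description(text_to_display: str) -> bool:
    """Decide whether the "Link description" field should be activated."""
    normalized = " ".join(text_to_display.lower().split())
    return len(normalized) < MIN_LENGTH or normalized in BLACKLIST


def render_link(url: str, text_to_display: str, description: str = "") -> str:
    """Build the anchor, adding a title attribute only when one is needed."""
    title = ""
    if needs_description(text_to_display) and description.strip():
        title = f' title="{escape(description.strip())}"'
    return f'<a href="{escape(url)}"{title}>{escape(text_to_display)}</a>'


# Vague hypertext: the description ends up in the title attribute.
print(render_link("https://example.org/sprint", "click here",
                  "Blog post about the accessibility sprint"))
# Descriptive hypertext: no title attribute is added.
print(render_link("https://example.org/sprint", "Accessibility sprint blog post"))
```

Because `needs_description` is a pure function of the “Text to display” value, the same check could be re-run on every keystroke to decide whether the description field is enabled.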

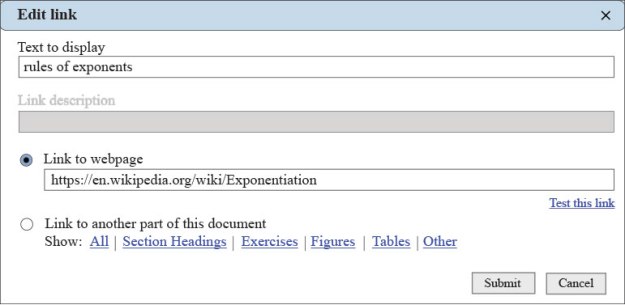

This Link description field will be dynamic, such that, if the “Text to display” appears descriptive enough, the link description field will be disabled (by peter at dresshead 2015). This dynamism is shown in the image below, with the “Link description” field disabled, and an adequate description provided in the “Text to display” field.

Problem with this Solution?

The utility of this solution hinges on the fact that persons relying on screen readers navigate through websites by tabbing from link to link. This does not necessarily mean that learners relying on screen readers will navigate through OER content and eBooks in the same manner.

It seems probable that students relying on screen readers to consume educational content will hear the hypertext in the context of the surrounding text, since their intention is to listen to and learn from the content, not just to navigate through it. If this is true, then it may not be important to design an interface that requires authors to provide highly descriptive links. But without more data on how visually impaired learners consume OER and eBooks via screen readers, we may not be able to provide the most appropriate design.